Building a Privacy-First AI Chatbot with LangChain

A comprehensive guide to creating a full-stack AI chatbot using LangChain, with a focus on privacy, customization, and developer experience.

In an era where AI-powered conversations are transforming how we interact with technology, building a chatbot that balances functionality with user privacy is more critical than ever. The open-source langchain-chatbot repository offers a compelling solution. This project leverages the LangChain framework to deliver a customizable, memory-enabled chatbot with robust privacy features and developer-friendly tools. Whether you're a developer exploring AI applications or a business seeking a conversational tool, this repo is worth a closer look. In this blog post, we'll dive into its key features, architecture, setup process, and more—complete with visuals to bring it to life.

What is langchain-chatbot?

At its core, langchain-chatbot is a full-stack chatbot implementation that uses the LangChain framework to connect to large language models (LLMs) from providers such as Google Vertex AI or OpenAI. What sets it apart is its focus on privacy, ease of use, and customization: it stores conversation history in MongoDB, anonymizes sensitive data with Microsoft Presidio, and provides tracing via LangSmith. It is also built on a modern stack (Next.js for the frontend and FastAPI for the backend), which makes it a ready-to-deploy solution.

Why it matters: Chatbots often handle sensitive user data, and ensuring privacy while maintaining a seamless experience is a challenge. This repo tackles that head-on, making it an excellent choice for developers who value security and flexibility.

Key Features

1. LangChain-Powered LLM Integration

LangChain is a framework that simplifies building applications with LLMs. In this repo, it connects the chatbot to models hosted by providers such as Vertex AI or OpenAI through their APIs. Because LangChain wraps each provider behind a common chat-model interface, developers can switch providers without major code changes.

Why it's cool: You're not locked into one LLM—experiment with different models to suit your needs.
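In practice, switching providers mostly comes down to which LangChain chat-model class gets constructed. The sketch below assumes a hypothetical `LLM_PROVIDER` environment variable and the `langchain-openai` / `langchain-google-vertexai` integration packages (which expose `ChatOpenAI` and `ChatVertexAI`). It imports the chosen package lazily, so only the provider you actually use needs to be installed.

```python
import importlib
import os
from typing import Any, Optional, Tuple

# Hypothetical registry: provider name -> (integration package, chat-model class)
PROVIDERS = {
    "openai": ("langchain_openai", "ChatOpenAI"),
    "vertexai": ("langchain_google_vertexai", "ChatVertexAI"),
}


def resolve_provider(name: Optional[str] = None) -> Tuple[str, str]:
    """Pick a provider from the argument or the (assumed) LLM_PROVIDER env var."""
    key = (name or os.getenv("LLM_PROVIDER", "openai")).strip().lower()
    if key not in PROVIDERS:
        raise ValueError(f"Unsupported LLM provider: {key!r}")
    return PROVIDERS[key]


def load_chat_model(name: Optional[str] = None, **kwargs: Any) -> Any:
    """Import the provider's package only when it is actually requested."""
    module_name, class_name = resolve_provider(name)
    module = importlib.import_module(module_name)
    return getattr(module, class_name)(**kwargs)
```

With `langchain-openai` installed and `OPENAI_API_KEY` set, `load_chat_model("openai", temperature=0.2)` would return a `ChatOpenAI` instance, and the rest of the chain does not need to know which provider sits behind it.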

2. Memory System with MongoDB

Ever chatted with a bot that forgets everything you just said? Not here. The chatbot uses MongoDB to store conversation history, enabling context-aware responses that feel more natural.
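Here is a minimal sketch of how stored history can be turned into context for the next prompt. It assumes a hypothetical document shape (`session_id`, `role`, `content`, `ts`) and uses a plain list in place of the MongoDB collection. With `pymongo`, the equivalent query would be along the lines of `collection.find({"session_id": sid}).sort("ts", -1).limit(n)`.

```python
from typing import Any, Dict, List

# Assumed document shape: {"session_id", "role", "content", "ts"}
Message = Dict[str, Any]


def recent_context(messages: List[Message], session_id: str, max_messages: int = 6) -> str:
    """Format the last `max_messages` messages of one session, oldest first."""
    session = sorted(
        (m for m in messages if m["session_id"] == session_id),
        key=lambda m: m["ts"],  # chronological order
    )
    # Guard: session[-0:] would return the whole list rather than nothing
    window = session[-max_messages:] if max_messages > 0 else []
    return "\n".join(f"{m['role']}: {m['content']}" for m in window)
```

Capping the window bounds prompt size. The trade-off is that older facts drop out of the context unless they are summarized or retrieved some other way.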

3. Customizable Personality

Want a chatbot that sounds like a friendly 30-year-old teacher or a witty teenager? You can tweak its personality by setting attributes like gender and age, tailoring the tone to your audience.

Use Case: Perfect for educational tools or customer service bots with a specific vibe.
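A common way to implement this kind of personality setting is to render the attributes into the system prompt. The `Persona` fields and the prompt wording below are hypothetical and are not taken from the repo.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    # Hypothetical attributes mirroring the "gender and age" settings described above
    name: str = "Ava"
    gender: str = "female"
    age: int = 30
    role: str = "teacher"
    tone: str = "friendly"

    def system_prompt(self) -> str:
        """Render the persona as instructions for the model's system message."""
        return (
            f"You are {self.name}, a {self.age}-year-old {self.gender} {self.role}. "
            f"Answer in a {self.tone} tone and stay in character."
        )
```

The resulting string would typically become the system message, for example via `ChatPromptTemplate.from_messages([("system", persona.system_prompt()), ("human", "{input}")])` from `langchain_core.prompts`.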

4. Privacy with Microsoft Presidio

Privacy is a standout feature. The chatbot uses Microsoft Presidio to anonymize personally identifiable information (PII) before sending it to the LLM API. Think of it like redacting a sensitive document: your name is replaced with a placeholder such as "<PERSON>", so the model never sees the real value.

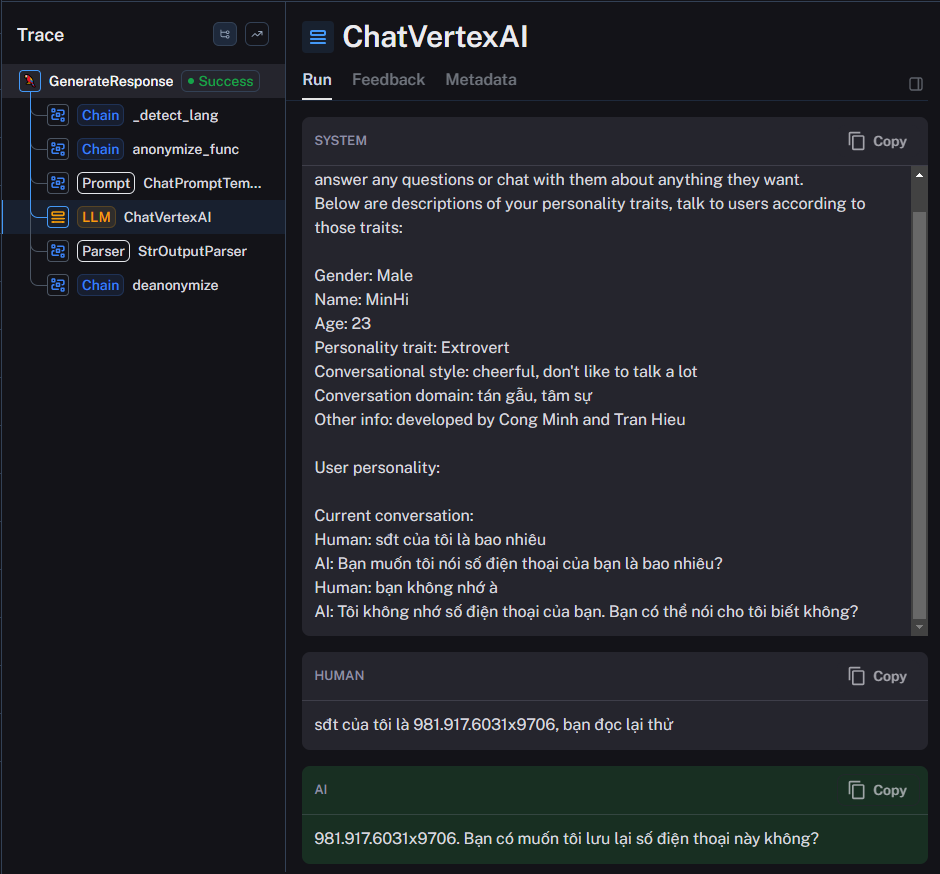
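Presidio splits the job in two. `AnalyzerEngine.analyze()` returns detected entities with `entity_type`, `start`, and `end` offsets, and `AnonymizerEngine` rewrites the text. The dependency-free sketch below illustrates only the rewriting half, using numbered placeholders plus a reverse map for restoring values later. The spans are written by hand in place of real analyzer output, and the numbering scheme is an assumption rather than Presidio's default (which replaces each entity with `<ENTITY_TYPE>`).

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Span:
    # The subset of Presidio's RecognizerResult fields this sketch needs
    entity_type: str
    start: int
    end: int


def redact(text: str, spans: List[Span]) -> Tuple[str, Dict[str, str]]:
    """Replace non-overlapping spans with numbered placeholders like <PERSON_1>."""
    mapping: Dict[str, str] = {}
    counts: Dict[str, int] = {}
    labelled = []
    # Number placeholders in reading order...
    for span in sorted(spans, key=lambda s: s.start):
        counts[span.entity_type] = counts.get(span.entity_type, 0) + 1
        label = f"<{span.entity_type}_{counts[span.entity_type]}>"
        mapping[label] = text[span.start:span.end]
        labelled.append((span, label))
    # ...but splice from the end backwards so earlier offsets stay valid
    for span, label in reversed(labelled):
        text = text[:span.start] + label + text[span.end:]
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Swap placeholders in the model's reply back to the original values."""
    for label, original in mapping.items():
        text = text.replace(label, original)
    return text
```

Splicing right to left is the key design choice: replacing an early span changes the length of the string, which would invalidate every offset after it if you went left to right.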

5. LangSmith Tracing

Debugging AI can be tricky, but LangSmith makes it easier. This tool traces how the chatbot processes inputs and generates outputs, giving developers a window into its behavior.

Why it's handy: Spot issues fast and optimize performance.
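Tracing is configured through environment variables rather than code changes. The helper below is a hypothetical startup check, not part of LangSmith or the repo. It flags the common mistake of enabling `LANGCHAIN_TRACING_V2` without setting `LANGCHAIN_API_KEY`. The optional `LANGCHAIN_PROJECT` variable, not checked here, chooses which LangSmith project receives the traces.

```python
import os
from typing import List, Mapping, Optional


def tracing_problems(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return LangSmith tracing configuration problems (an empty list means OK)."""
    env = os.environ if env is None else env
    if env.get("LANGCHAIN_TRACING_V2", "").strip().lower() != "true":
        return []  # tracing is off, so there is nothing to validate
    problems = []
    if not env.get("LANGCHAIN_API_KEY"):
        problems.append("LANGCHAIN_TRACING_V2 is true but LANGCHAIN_API_KEY is not set")
    return problems


if __name__ == "__main__":
    for problem in tracing_problems():
        print(f"warning: {problem}")
```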

The Architecture

This isn't just a backend script—it's a full-stack app:

Frontend (Next.js)

A sleek, responsive interface built with Next.js handles user interactions. It's where you type your messages and see the bot reply.

Modern chat interface with message bubbles, input field at the bottom, and clean design. User messages appear on the right in primary color, while bot responses are on the left.

Backend (FastAPI)

The FastAPI-powered backend handles the application logic and connects to LangChain, MongoDB, and the LLM API. FastAPI is a modern, asynchronous Python web framework that is well suited to serving these API requests.

How It Works Together: User input travels from the frontend to the backend, where it is anonymized and combined with conversation context pulled from MongoDB. The backend then queries the LLM and sends the response back to the frontend.
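The request flow can be sketched as a single function with its collaborators passed in. All of the names here are hypothetical: `anonymize`, `deanonymize`, `load_history`, `save_turn`, and `call_llm` stand in for the Presidio layer, the MongoDB store, and the LangChain call. Persisting only the masked text is one reasonable choice for this sketch. The repo may store history differently.

```python
from typing import Callable, List


def handle_message(
    session_id: str,
    user_text: str,
    anonymize: Callable[[str], str],
    deanonymize: Callable[[str], str],
    load_history: Callable[[str], List[str]],
    save_turn: Callable[[str, str, str], None],
    call_llm: Callable[[List[str], str], str],
) -> str:
    """One chat turn: mask PII, add stored context, query the LLM, store, unmask."""
    safe_text = anonymize(user_text)              # placeholders replace raw PII
    history = load_history(session_id)            # earlier turns for this session
    safe_reply = call_llm(history, safe_text)     # the model only sees masked text
    save_turn(session_id, safe_text, safe_reply)  # persist the masked exchange
    return deanonymize(safe_reply)                # real values restored for the user
```

Passing the collaborators as plain callables keeps the ordering testable with simple lambdas. In the app, they would wrap the anonymizer, a MongoDB collection, and a LangChain chain.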

Complete Architecture Overview

```text
┌──────────────────────────────────────────────────────────────────────┐
│                      Docker Compose Environment                      │
│                                                                      │
│  ┌───────────────┐      ┌────────────────┐      ┌─────────────────┐  │
│  │    Next.js    │◄────►│    FastAPI     │◄────►│     MongoDB     │  │
│  │   Frontend    │      │    Backend     │      │    Database     │  │
│  │  (Port 3000)  │      │  (Port 8080)   │      │  (Port 27017)   │  │
│  └───────────────┘      └───────┬────────┘      └─────────────────┘  │
│                                 │                                    │
│                                 ▼                                    │
│                        ┌─────────────────┐      ┌─────────────────┐  │
│                        │    LangChain    │─────►│    LangSmith    │  │
│                        │    Framework    │      │    (Tracing)    │  │
│                        └────────┬────────┘      └─────────────────┘  │
│                                 │                                    │
│                                 ▼                                    │
│                        ┌─────────────────┐      ┌─────────────────┐  │
│                        │  LLM Provider   │      │    Presidio     │  │
│                        │ (OpenAI/Vertex) │      │   Anonymizer    │  │
│                        │                 │      │ (PII Protection)│  │
│                        └─────────────────┘      └─────────────────┘  │
│                                                                      │
└──────────────────────────────────────────────────────────────────────┘
```

Comprehensive architecture diagram of the langchain-chatbot system.

Here's a peek at how the backend might set up the LangChain chain (simplified for illustration):

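```python
import os

# Recent LangChain releases ship the OpenAI integration as its own package
# (pip install langchain-openai) instead of langchain.chat_models
from langchain_openai import ChatOpenAI
from langchain.chains import ConversationChain

# Initialize the language model, reading the API key from the environment
llm = ChatOpenAI(api_key=os.environ["OPENAI_API_KEY"])

# Create a conversation chain (it keeps an in-memory buffer of earlier turns);
# newer LangChain versions deprecate it in favor of RunnableWithMessageHistory
conversation = ConversationChain(llm=llm)

# Generate a response
response = conversation.predict(input="Hello, how can I assist you today?")
```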

Getting Started

Ready to try it? Here's how to get langchain-chatbot running:

Prerequisites:

- Python 3.9+

- Node.js (for the frontend)

- API keys for Vertex AI or OpenAI

- MongoDB (local or cloud instance)

```bash
# Clone the repository
git clone https://github.com/minhbtrc/langchain-chatbot.git
# Navigate to the project directory
cd langchain-chatbot
```

```bash
# Create a virtual environment
python -m venv venv
# Activate the virtual environment
# On Windows:
venv\Scripts\activate
# On macOS/Linux:
source venv/bin/activate
# Install dependencies
pip install -r requirements.txt
```

Create a .env file in the project root with:

```text
OPENAI_API_KEY=your_openai_api_key
MONGODB_URI=your_mongodb_connection_string
ANONYMIZATION_KEY=your_encryption_secret_key
```
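The anonymization handler shown later passes `ANONYMIZATION_KEY` to `cryptography`'s `Fernet`, which only accepts a 32-byte key encoded as URL-safe base64. A placeholder string like the one above will therefore be rejected. The standard-library sketch below produces a key in the same format as `Fernet.generate_key()` and checks an existing value. The helper names are hypothetical.

```python
import base64
import os


def generate_fernet_key() -> str:
    """32 random bytes, URL-safe base64 encoded: the format Fernet.generate_key() produces."""
    return base64.urlsafe_b64encode(os.urandom(32)).decode("ascii")


def is_valid_fernet_key(key: str) -> bool:
    """True if `key` decodes to exactly 32 bytes, the length Fernet requires."""
    try:
        return len(base64.urlsafe_b64decode(key.encode("ascii"))) == 32
    except ValueError:  # binascii.Error and UnicodeEncodeError both subclass ValueError
        return False


if __name__ == "__main__":
    # Paste the printed line into your .env file
    print(f"ANONYMIZATION_KEY={generate_fernet_key()}")
```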

Standard Method

```bash
# Run the application
python app.py
# The app will be available at http://localhost:5000
```

Docker Option

```bash
# Build and run using Docker
docker-compose up --build
# The app will be available at http://localhost:5000
```

Note: If you use LangSmith, add tracing variables such as LANGCHAIN_TRACING_V2=true and LANGCHAIN_API_KEY=your_langsmith_api_key to backend/.env.

Privacy Features in Action

In 2025, data privacy isn't optional—it's essential. By integrating Microsoft Presidio, langchain-chatbot ensures that sensitive info like names or emails is masked before leaving your system. This is a game-changer for industries like healthcare or finance, where compliance is non-negotiable.

Implementing Anonymization

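Here's a simplified anonymization layer. To stay dependency-light, it detects PII with regular expressions instead of Presidio's recognizers and uses Fernet encryption for the original values: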

from langchain.callbacks import BaseCallbackHandler

from cryptography.fernet import Fernet

import re

import os

class AnonymizationHandler(BaseCallbackHandler):

def __init__(self, encryption_key):

self.cipher = Fernet(encryption_key)

self.pii_patterns = {

'email': '\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Z|a-z]{2,}\b',

'phone': '\b(\+\d{1,2}\s)?\(?\d{3}\)?[\s.-]\d{3}[\s.-]\d{4}\b',

'ssn': '\b\d{3}-\d{2}-\d{4}\b',

'credit_card': '\b\d{4}[\s-]?\d{4}[\s-]?\d{4}[\s-]?\d{4}\b'

}

self.anonymized_data = {}

def anonymize_text(self, text):

for pii_type, pattern in self.pii_patterns.items():

for match in re.finditer(pattern, text):

original = match.group(0)

if original not in self.anonymized_data:

# Encrypt and store original data

encrypted = self.cipher.encrypt(original.encode()).decode()

self.anonymized_data[original] = f"[{pii_type.upper()}_{len(self.anonymized_data)+1}]"

# Replace in text

text = text.replace(original, self.anonymized_data[original])

return text

def deanonymize_text(self, text):

# Reverse the anonymization

for original, anonymized in self.anonymized_data.items():

text = text.replace(anonymized, original)

return textUsing the Anonymization Handler

# Initialize the handler with your encryption key

handler = AnonymizationHandler(os.getenv("ANONYMIZATION_KEY"))

# Setup the chain with callbacks

llm = ChatOpenAI(

api_key=os.getenv("OPENAI_API_KEY"),

callbacks=[handler]

)

# The handler will automatically process inputs and outputs

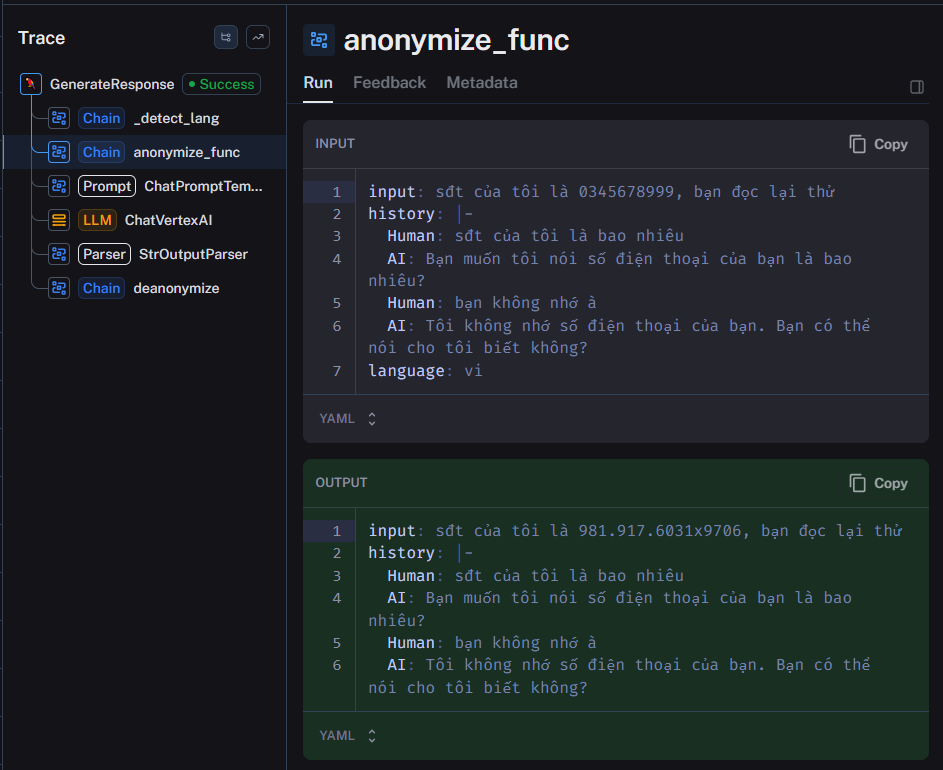

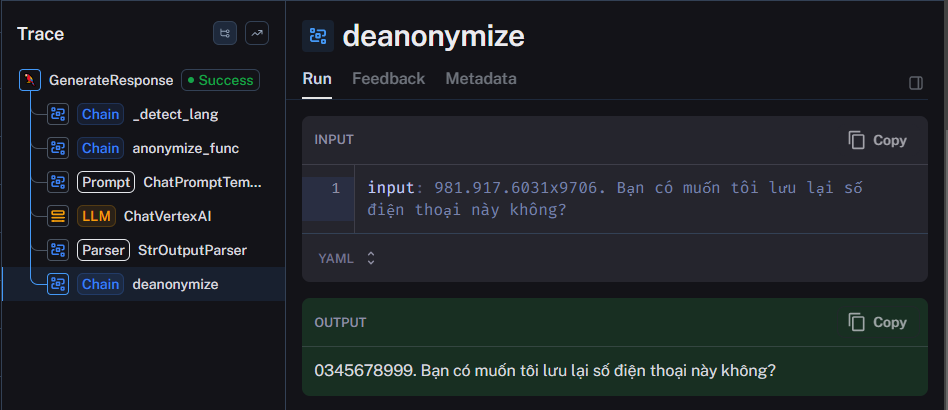

chain = ConversationChain(llm=llm)Anonymization in Practice

Before Anonymization

After Anonymization


De-anonymized Output Example: the placeholders in the model's reply are swapped back for the original values.

Who's It For?

This repo shines for:

- Developers: Experiment with LangChain and LLMs in a pre-built application. Ideal for learning how to implement privacy-focused AI systems.

- Businesses: Deploy a customer support bot with memory and a customizable personality. Well suited to regulated industries that need secure AI solutions.

- Hobbyists: Tinker with a privacy-first chatbot for personal projects. Great for exploring LangChain capabilities without building from scratch.

Conclusion

The langchain-chatbot repository is a gem for anyone looking to build a smart, secure, and user-friendly chatbot. Its blend of LangChain's power, MongoDB's memory, Presidio's privacy, and a full-stack design makes it both practical and innovative. Whether you're enhancing a business or exploring AI, this project offers a solid starting point.

Ready to dive in? Visit the GitHub repo, clone it, and give it a spin. Have ideas to improve it? It's open-source—jump in and contribute!